Exploring Markov Decision Processes: A Comprehensive Survey of Optimization Applications and Techniques

Harnessing the Power of Markov Decision Processes in Machine Learning and Robotics

Machine Learning and Robotics are reshaping industries by building decision-making algorithms into systems that must adapt and optimize as conditions change. A pivotal tool in this transformation is the Markov Decision Process (MDP), a model of sequential decision-making under uncertainty that is typically solved with dynamic programming or reinforcement learning and is used for optimization problems across many domains.

This post reviews a recent comprehensive study of MDP applications, focusing on what it means for Machine Learning and Robotics and on how MDPs can improve decision-making in dynamic environments.

Introduction to Markov Decision Processes

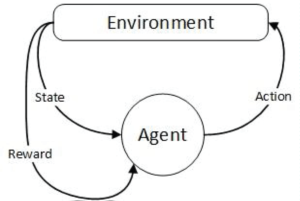

The Markov Decision Process is a mathematical framework for modeling sequential decision-making in environments where outcomes are partly random and partly under the control of a decision-maker. An MDP is defined by four components: states (S), actions (A), transition probabilities (P), and rewards (R). Solving an MDP means finding the optimal policy, a rule that maps each state to an action, so that the expected cumulative reward over time is maximized.
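One common way to make "optimal policy" precise is the Bellman optimality equation. The form below is a sketch under assumptions that the text above does not state: a discount factor γ in [0, 1), rewards written as R(s, a), and transition probabilities written as P(s' | s, a).

```latex
V^{*}(s) = \max_{a \in A} \Big[ R(s, a) + \gamma \sum_{s' \in S} P(s' \mid s, a)\, V^{*}(s') \Big],
\qquad
\pi^{*}(s) = \arg\max_{a \in A} \Big[ R(s, a) + \gamma \sum_{s' \in S} P(s' \mid s, a)\, V^{*}(s') \Big]
```

Here V*(s) is the best achievable expected discounted reward starting from state s, and π*(s) is an action that attains it.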

Key attributes of MDP include:

- Dynamic Nature: Adjusts to real-time changes in the environment.

- Sequential Decision-Making: Balances immediate and long-term rewards.

- Probabilistic Modeling: Manages uncertainty in outcomes.
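To make the four components concrete, here is a minimal sketch of value iteration on a toy MDP. The two states, two actions, every probability and reward, and the discount factor `GAMMA` are invented for illustration and are not taken from the study.

```python
# Hypothetical 2-state, 2-action MDP; every number here is illustrative.
STATES = [0, 1]
ACTIONS = [0, 1]
# P[s][a][s2] = probability of landing in state s2 after taking action a in state s.
P = {
    0: {0: [0.9, 0.1], 1: [0.5, 0.5]},
    1: {0: [0.2, 0.8], 1: [0.0, 1.0]},
}
# R[s][a] = expected immediate reward for taking action a in state s.
R = {0: {0: 1.0, 1: 0.0}, 1: {0: 0.0, 1: 2.0}}
GAMMA = 0.9  # discount factor (an assumption, not part of the four components)


def q_value(V, s, a):
    """Immediate reward plus discounted expected value of the next state."""
    return R[s][a] + GAMMA * sum(p * V[s2] for s2, p in enumerate(P[s][a]))


def value_iteration(tol=1e-10, max_iter=100_000):
    """Repeat the Bellman optimality backup until the values stop changing."""
    V = [0.0 for _ in STATES]
    for _ in range(max_iter):
        V_new = [max(q_value(V, s, a) for a in ACTIONS) for s in STATES]
        delta = max(abs(x - y) for x, y in zip(V_new, V))
        V = V_new
        if delta < tol:
            break
    # The greedy policy picks, in each state, the action with the highest Q-value.
    policy = [max(ACTIONS, key=lambda a: q_value(V, s, a)) for s in STATES]
    return V, policy


V, policy = value_iteration()
print("values:", V)
print("policy:", policy)
```

In this toy problem, state 0 pays a small reward for staying put, yet the computed policy gives that up to reach state 1, where the long-term payoff is higher. That is the balance between immediate and long-term rewards described above.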

Applications in Machine Learning and Robotics

1. Reinforcement Learning

Reinforcement learning, a subfield of Machine Learning, typically formalizes its problems as MDPs. Once states, actions, and rewards are defined, an agent can learn an optimal policy through trial and error, without being given the transition probabilities in advance. Applications include:

- Autonomous navigation in robotics.

- Game strategy development.

- Real-time adaptive control systems.
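Trial-and-error learning can be sketched with tabular Q-learning, one widely used reinforcement learning algorithm. The five-cell corridor environment, its reward of 1 at the goal, and the hyperparameters below are all hypothetical. The environment is deterministic to keep the example short, whereas real robotic tasks are usually stochastic.

```python
import random

N_STATES = 5            # cells 0..4 on a line; cell 4 is the goal (terminal)
LEFT, RIGHT = 0, 1
ALPHA, GAMMA, EPSILON = 0.5, 0.9, 0.1  # assumed learning rate, discount, exploration


def step(state, action):
    """Hypothetical environment: move one cell, reward 1.0 on reaching the goal."""
    nxt = max(0, state - 1) if action == LEFT else state + 1
    done = nxt == N_STATES - 1
    return nxt, (1.0 if done else 0.0), done


def choose_action(Q, state, rng):
    """Epsilon-greedy selection; ties are broken at random."""
    if rng.random() < EPSILON or Q[state][LEFT] == Q[state][RIGHT]:
        return rng.choice([LEFT, RIGHT])
    return LEFT if Q[state][LEFT] > Q[state][RIGHT] else RIGHT


def train(episodes=500, max_steps=200, seed=0):
    rng = random.Random(seed)
    Q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        for _ in range(max_steps):
            action = choose_action(Q, state, rng)
            nxt, reward, done = step(state, action)
            # Q-learning update: bootstrap from the best action in the next state.
            target = reward + (0.0 if done else GAMMA * max(Q[nxt]))
            Q[state][action] += ALPHA * (target - Q[state][action])
            state = nxt
            if done:
                break
    return Q


Q = train()
greedy = ["right" if q[RIGHT] > q[LEFT] else "left" for q in Q[:-1]]
print(greedy)
```

The agent is never shown the transition rule inside `step`. It only observes the next state and reward, which is what separates learning a policy from planning with a known model.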

2. Robotics Decision-Making

In Robotics, MDP aids in high-stakes decision-making, such as:

- Path planning for autonomous vehicles.

- Manipulating robotic arms in dynamic workspaces.

- Optimizing energy usage in robotic operations.

MDP’s ability to model uncertainties makes it invaluable in robotics, where unexpected scenarios are frequent.

3. Fault Detection and Maintenance

Figure: Working Flow of MDP


MDP is employed in predictive maintenance of robots, enabling:

- Early detection of component wear.

- Optimal scheduling of maintenance activities.

- Minimizing operational downtime while extending system lifespan.
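Maintenance scheduling can be sketched as a small machine-replacement MDP solved with policy iteration. The four wear levels, the degradation probability, the revenues, and the costs below are all made up for illustration and do not come from the study.

```python
WEAR_LEVELS = [0, 1, 2, 3]          # 0 = like new, 3 = broken down
OPERATE, MAINTAIN = "operate", "maintain"
GAMMA = 0.9                         # assumed discount factor
P_DEGRADE = 0.5                     # assumed chance that wear grows by one level per step


def outcomes(wear, action):
    """Return [(probability, next_wear, reward), ...] for a hypothetical machine."""
    if action == MAINTAIN:
        return [(1.0, 0, -5.0)]     # pay for service, wear resets to 0
    if wear == 3:
        return [(1.0, 3, -20.0)]    # running a broken machine is costly
    revenue = 10.0 - 4.0 * wear     # worn machines earn less
    return [(P_DEGRADE, wear + 1, revenue), (1.0 - P_DEGRADE, wear, revenue)]


def q_value(V, wear, action):
    return sum(p * (r + GAMMA * V[nxt]) for p, nxt, r in outcomes(wear, action))


def evaluate(policy, tol=1e-10):
    """Iterative policy evaluation: value of following `policy` forever."""
    V = [0.0] * len(WEAR_LEVELS)
    while True:
        delta = 0.0
        for w in WEAR_LEVELS:
            v = q_value(V, w, policy[w])
            delta = max(delta, abs(v - V[w]))
            V[w] = v
        if delta < tol:
            return V


def policy_iteration(max_rounds=100):
    policy = [OPERATE] * len(WEAR_LEVELS)
    V = evaluate(policy)
    for _ in range(max_rounds):
        # Improvement step: act greedily with respect to the current values.
        improved = [max((OPERATE, MAINTAIN), key=lambda a: q_value(V, w, a))
                    for w in WEAR_LEVELS]
        if improved == policy:
            break
        policy = improved
        V = evaluate(policy)
    return policy, V


policy, V = policy_iteration()
print(policy)
```

With these numbers the result is a threshold rule: keep operating while wear is low and service the machine once wear passes a certain level. Changing the service cost or degradation probability moves that threshold, and this is how such a model trades downtime against lifespan.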

Innovations in Optimization Techniques

The study highlights several advanced optimization techniques leveraging MDP:

- Regularized MDPs: Incorporate constraints like safety and cost-efficiency, improving decision-making in critical applications such as healthcare and autonomous systems.

- Hierarchical Reinforcement Learning: Divides complex tasks into sub-tasks, each modeled as an MDP, simplifying problem-solving in robotics.

- Deep Reinforcement Learning with MDPs: Combines neural networks with MDP frameworks to handle large state spaces, crucial for applications like self-driving cars and robotics.
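As one illustration of regularization, the sketch below applies entropy-regularized ("soft") value iteration to a toy MDP. Entropy regularization is only one member of the regularized-MDP family, and it is an assumption here that it represents what the study covers. The MDP, the discount `GAMMA`, and the temperature `TAU` are invented for the example.

```python
import math

# Hypothetical 2-state, 2-action MDP; P[s][a] lists next-state probabilities.
P = {0: {0: [0.9, 0.1], 1: [0.5, 0.5]}, 1: {0: [0.2, 0.8], 1: [0.0, 1.0]}}
R = {0: {0: 1.0, 1: 0.0}, 1: {0: 0.0, 1: 2.0}}
GAMMA, TAU = 0.9, 0.5   # assumed discount factor and entropy temperature


def soft_q(V, s, a):
    return R[s][a] + GAMMA * sum(p * V[s2] for s2, p in enumerate(P[s][a]))


def log_sum_exp(xs):
    """Numerically stable log(sum(exp(x)))."""
    m = max(xs)
    return m + math.log(sum(math.exp(x - m) for x in xs))


def soft_value_iteration(tol=1e-10, max_iter=100_000):
    V = [0.0, 0.0]
    for _ in range(max_iter):
        # The hard max of standard value iteration becomes a smooth log-sum-exp.
        V_new = [TAU * log_sum_exp([soft_q(V, s, a) / TAU for a in (0, 1)])
                 for s in (0, 1)]
        delta = max(abs(x - y) for x, y in zip(V_new, V))
        V = V_new
        if delta < tol:
            break
    # The regularized optimal policy is stochastic: pi(a|s) = exp((Q(s,a) - V(s)) / TAU).
    policy = [[math.exp((soft_q(V, s, a) - V[s]) / TAU) for a in (0, 1)]
              for s in (0, 1)]
    return V, policy


V, policy = soft_value_iteration()
print(policy)
```

As `TAU` approaches zero the policy approaches the deterministic greedy one. Larger values keep the policy closer to uniform, which is one way to avoid over-committing to an action when the model may be inaccurate.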

Challenges in Markov Decision Processes

Despite its versatility, MDP faces challenges:

- Scalability: Handling large state-action spaces requires significant computational resources.

- Transition Uncertainty: Modeling accurate transition probabilities in dynamic environments is complex.

- Explainability: MDP-based models often lack transparency, making them difficult to interpret for critical applications.
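The scalability problem is easy to quantify for tabular methods. The sketch below counts the entries in a dense state-action table, assuming each state dimension (for example a robot joint angle) is discretized into the same number of bins. The bin counts, action count, and 8 bytes per entry are illustrative assumptions.

```python
def q_table_entries(bins_per_dim: int, n_dims: int, n_actions: int) -> int:
    """Number of (state, action) pairs in a dense tabular representation."""
    return bins_per_dim ** n_dims * n_actions


def q_table_gib(bins_per_dim: int, n_dims: int, n_actions: int,
                bytes_per_entry: int = 8) -> float:
    """Approximate memory for the table in GiB, assuming 8-byte floats by default."""
    return q_table_entries(bins_per_dim, n_dims, n_actions) * bytes_per_entry / 2**30


for n_dims in (2, 4, 6, 8):
    entries = q_table_entries(10, n_dims, 4)
    print(f"{n_dims} dims: {entries:,} entries, {q_table_gib(10, n_dims, 4):.4f} GiB")
```

Each added dimension multiplies the table size by the number of bins. This exponential growth is why large problems usually move from tables to function approximation.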

Case Studies Highlighting MDP Applications

1. Autonomous Vehicles

MDPs are critical in enabling decision-making for autonomous vehicles:

- Managing lane changes and obstacle avoidance.

- Optimizing fuel efficiency through adaptive control.

- Enhancing safety in unpredictable traffic scenarios.

2. Agricultural Optimization

In precision agriculture, MDP aids in:

- Optimizing irrigation schedules based on soil and weather data.

- Allocating resources like fertilizers efficiently.

- Predicting crop yield and planning harvesting activities.

3. Energy Management in Robotics

Robots operating on limited power sources benefit from MDP-based energy management systems that balance task completion with energy conservation.

Future Directions in Machine Learning and Robotics

Combining MDPs with advances in Machine Learning and Robotics is opening several research directions:

- Scalable MDP Models: Research is focusing on algorithms that efficiently handle massive state-action spaces without compromising accuracy.

- Integration with IoT: IoT-enabled robots can use MDP for real-time decision-making, enhancing efficiency in smart factories and logistics.

- Human-Robot Collaboration: MDP models are being tailored to improve interactions between robots and humans, ensuring safety and productivity.

- Sustainable Robotics: By integrating MDP with sustainability goals, robots can minimize energy use and environmental impact during operations.

Conclusion

Markov Decision Processes stand at the intersection of Machine Learning and Robotics, offering powerful tools to optimize decision-making in complex, uncertain environments. From autonomous navigation to predictive maintenance, MDP’s versatility ensures its relevance across industries.

As research progresses, innovations in MDP modeling and its integration with emerging technologies promise to unlock new possibilities, reinforcing its role as a cornerstone of intelligent systems. Used together with Machine Learning and Robotics, MDPs will continue to shape the future of technology, making systems smarter, safer, and more efficient.

References

- Bharati M, Singh V, Kripal R. Modeling of Cr³⁺ doped Cassiterite (SnO₂) Single Crystals.

- DOI: 10.61927/igmin210.

FAQs

- What is a Markov Decision Process (MDP)?

A Markov Decision Process (MDP) is a mathematical framework used for modeling decision-making in environments with uncertainty, defined by states, actions, transition probabilities, and rewards.

- How are MDPs used in Machine Learning and Robotics?

MDPs are used in reinforcement learning to train agents, optimize path planning, manage energy usage, and enhance decision-making in dynamic and uncertain robotic environments.

- What are the main challenges of using MDPs?

Key challenges include scalability in handling large state-action spaces, accurately modeling transition probabilities, and improving the explainability of MDP-based solutions.

- What innovations in MDP are highlighted in the study?

Innovations include regularized MDPs for constraint handling, hierarchical reinforcement learning for complex task decomposition, and deep reinforcement learning to address large state spaces.

- What industries benefit from MDP applications?

MDPs are widely applied in autonomous vehicles, precision agriculture, energy-efficient robotics, predictive maintenance, finance, and healthcare, providing optimized decision-making in various domains.